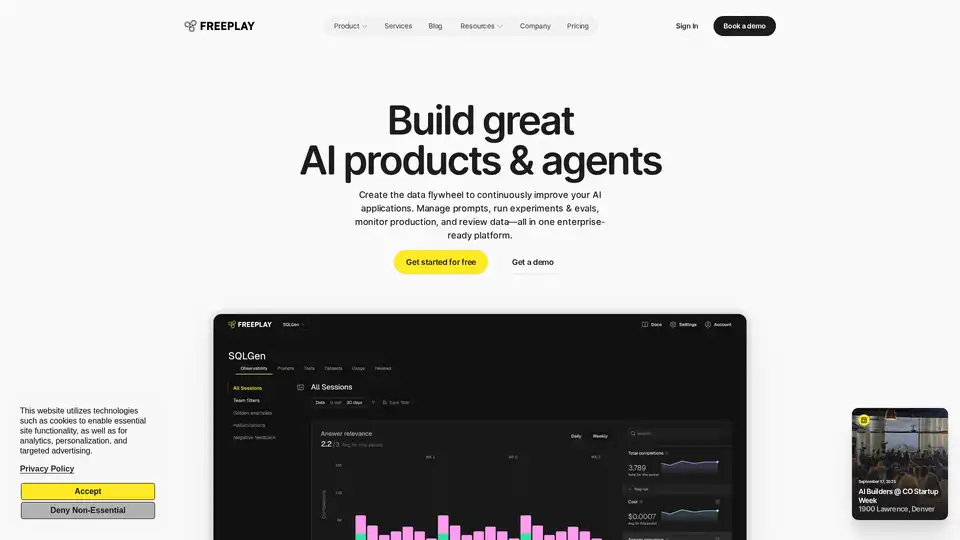

Freeplay

Overview of Freeplay

What is Freeplay?

Freeplay is an AI evals and observability platform designed to help AI teams build better products faster. It focuses on creating a data flywheel where continuous improvement is driven by evaluations, experiments, and data review workflows. It's an enterprise-ready platform that streamlines the process of managing prompts, running experiments, monitoring production, and reviewing data, all in one place.

How does Freeplay work?

Freeplay works by providing a unified platform for various stages of AI product development:

- Prompt & Model Management: Lets teams version and deploy prompt and model changes the way they would toggle feature flags, which supports rigorous experimentation.

- Evaluations: Allows the creation and tuning of custom evaluations that measure quality specific to the AI product.

- LLM Observability: Offers instant search to find and review any LLM interaction, from development to production.

- Batch Tests & Experiments: Simplifies launching tests and measuring the impact of changes to prompts and agent pipelines.

- Auto-Evals: Automates the execution of test suites for both testing and production monitoring.

- Production Monitoring & Alerts: Uses evaluations and customer feedback to catch issues and gain actionable insights from production data.

- Data Review & Labeling: Provides multi-player workflows to analyze and label data, identify patterns, and share learnings.

- Dataset Management: Turns production logs into test cases and golden sets for experimentation and fine-tuning.
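The "similar to feature flags" idea in the prompt management bullet can be illustrated with a small sketch. This is not Freeplay's SDK: `PromptRegistry`, its methods, and the prompt and environment names are all hypothetical, chosen only to show how immutable prompt versions might be pinned to environments and swapped without a code change.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Hypothetical in-memory registry for illustration; not Freeplay's API."""

    # prompt name -> list of templates, where list index + 1 is the version number
    versions: dict[str, list[str]] = field(default_factory=dict)
    # (prompt name, environment) -> version number currently deployed there
    deployed: dict[tuple[str, str], int] = field(default_factory=dict)

    def save(self, name: str, template: str) -> int:
        """Store a new version and return its 1-based version number."""
        self.versions.setdefault(name, []).append(template)
        return len(self.versions[name])

    def deploy(self, name: str, version: int, environment: str) -> None:
        """Point an environment at a version, much like flipping a feature flag."""
        if not 1 <= version <= len(self.versions.get(name, [])):
            raise ValueError(f"unknown version {version} for prompt {name!r}")
        self.deployed[(name, environment)] = version

    def render(self, name: str, environment: str, **variables: str) -> str:
        """Fill in the template currently deployed to the given environment."""
        version = self.deployed[(name, environment)]
        return self.versions[name][version - 1].format(**variables)


registry = PromptRegistry()
v1 = registry.save("support_reply", "Answer the question: {question}")
v2 = registry.save("support_reply", "Answer briefly and politely: {question}")

# Production stays on v1 while v2 is tried out in staging.
registry.deploy("support_reply", v1, "production")
registry.deploy("support_reply", v2, "staging")

question = "How do I reset my password?"
prod_prompt = registry.render("support_reply", "production", question=question)
staging_prompt = registry.render("support_reply", "staging", question=question)
print(prod_prompt)
print(staging_prompt)
```

Because application code asks only for "the version deployed to this environment", promoting v2 to production would be a single `deploy` call rather than a code release.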

Key Features and Benefits

- Streamlined AI Development: Consolidates tools and workflows to reduce the need to switch between different applications.

- Continuous Improvement: Creates a data flywheel that ensures AI products continuously improve based on data-driven insights.

- Enhanced Experimentation: Facilitates rigorous experimentation with prompt and model changes.

- Improved Product Quality: Enables the creation and tuning of custom evaluations to measure specific quality metrics.

- Actionable Insights: Provides production monitoring and alerts based on evaluations and customer feedback.

- Collaboration: Supports multi-player workflows for data review and labeling.

Why Choose Freeplay?

Several customer testimonials highlight the benefits of using Freeplay:

- Faster Iteration: Teams report significant increases in their pace of iteration and in the efficiency of prompt improvements.

- Improved Confidence: Users can ship and iterate on AI features with confidence, knowing how changes will impact customers.

- Disciplined Workflow: Freeplay transforms what was once a black-box process into a testable and disciplined workflow.

- Easy Integration: The platform offers lightweight SDKs and APIs that integrate seamlessly with existing code.

Who is Freeplay for?

Freeplay is designed for:

- AI engineers and domain experts working on AI product development.

- Teams looking to streamline their AI development workflows.

- Companies that need to ensure the quality and continuous improvement of their AI products.

- Enterprises that require security, control, and expert support for their AI initiatives.

Practical Applications and Use Cases

- Building AI Agents: Helps in building production-grade AI agents with end-to-end agent evaluation and observability.

- Improving Customer Experience: Helps companies get the details of AI-powered experiences right through deliberate testing and iteration.

- Enhancing Prompt Engineering: Transforms prompt engineering into a disciplined, testable workflow.

How do you use Freeplay?

- Sign Up: Start by signing up for a Freeplay account.

- Integrate SDKs: Integrate Freeplay's SDKs and APIs into your codebase.

- Manage Prompts: Use the prompt and model management features to version and deploy changes.

- Create Evaluations: Define custom evaluations to measure the quality of your AI product.

- Run Experiments: Launch tests and measure the impact of changes to prompts and agent pipelines.

- Monitor Production: Use production monitoring and alerts to catch issues and gain insights.

- Review Data: Analyze and label data using the multi-player workflows.
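The "Create Evaluations" and "Run Experiments" steps can be sketched generically as follows. Everything here is an assumption for illustration rather than Freeplay's SDK: `fake_model` stands in for a real LLM call so the example runs offline, and the dataset, `contains_expected`, and `run_batch_test` are hypothetical names.

```python
from statistics import mean
from typing import Callable

# Hypothetical test cases; in practice these might be curated from production logs.
DATASET = [
    {"question": "What is 2 + 2?", "expected": "4"},
    {"question": "What is the capital of France?", "expected": "Paris"},
]


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM call (an assumption so the sketch runs without a network)."""
    if "2 + 2" in prompt:
        return "The answer is 4."
    if "concise" in prompt.lower():
        return "Paris"
    return "I am not sure."


def contains_expected(output: str, expected: str) -> float:
    """Custom evaluation: score 1.0 if the expected answer appears in the output."""
    return 1.0 if expected.lower() in output.lower() else 0.0


def run_batch_test(
    template: str,
    dataset: list[dict[str, str]],
    model: Callable[[str], str],
    evaluate: Callable[[str, str], float],
) -> float:
    """Run every test case through the model and return the mean evaluation score."""
    scores = []
    for case in dataset:
        prompt = template.format(question=case["question"])
        scores.append(evaluate(model(prompt), case["expected"]))
    return mean(scores)


baseline_score = run_batch_test("Question: {question}", DATASET, fake_model, contains_expected)
candidate_score = run_batch_test(
    "Be concise. Question: {question}", DATASET, fake_model, contains_expected
)
print(f"baseline={baseline_score:.2f} candidate={candidate_score:.2f}")
```

Running the same dataset and the same evaluation against both prompt versions is what makes the comparison meaningful: the only thing that changes between the two runs is the prompt.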

Is Freeplay Enterprise Ready?

Yes, Freeplay offers enterprise-level features, including:

- Security and Privacy: SOC 2 Type II and GDPR compliance, with private hosting options.

- Access Control: Granular RBAC to control data access.

- Expert Support: Hands-on support, training, and strategy from experienced AI engineers.

- Integrations: API support and connectors to other systems for data portability and automation.

Freeplay helps AI teams build better products faster by streamlining development workflows and providing the tools needed for experimentation, evaluation, and observability. By creating a data flywheel, it lets teams iterate quickly and confidently on AI features, ultimately leading to higher-quality AI products.

Categories: AI Task and Project Management, AI Document Summarization and Reading, AI Smart Search, AI Data Analysis, Automated Workflow

Best Alternative Tools to "Freeplay"

Athina is a collaborative AI platform that helps teams build, test, and monitor LLM-based features 10x faster. With tools for prompt management, evaluations, and observability, it ensures data privacy and supports custom models.

Parea AI is an AI experimentation and human annotation platform that helps teams confidently ship LLM applications. It offers features for experiment tracking, LLM evaluation, observability, human review, prompt testing, and prompt deployment.

HoneyHive provides AI evaluation, testing, and observability tools for teams building LLM applications. It offers a unified LLMOps platform.