HoneyHive

Overview of HoneyHive

HoneyHive: The AI Observability and Evaluation Platform

What is HoneyHive?

HoneyHive is a comprehensive AI observability and evaluation platform designed for teams building Large Language Model (LLM) applications. It provides a single, unified LLMOps platform to build, test, debug, and monitor AI agents, whether you're just getting started or scaling across an enterprise.

Key Features:

- Evaluation: Systematically measure AI quality with evals. Simulate your AI agent pre-deployment over large test suites to identify critical failures and regressions.

- Agent Observability: Get instant end-to-end visibility into your agent interactions with OpenTelemetry, and analyze the underlying logs to debug issues faster. Visualize agent steps with graph and timeline views.

- Monitoring & Alerting: Continuously monitor performance and quality metrics at every step, from retrieval and tool use to reasoning, guardrails, and beyond. Get alerts on critical AI failures.

- Artifact Management: Collaborate with your team in the UI or in code. Manage prompts, tools, datasets, and evaluators in the cloud, synced between UI & code.

How to use HoneyHive?

- Evaluation: Define your test cases and evaluation metrics.

- Tracing: Ingest traces via OTel or REST APIs to monitor agent interactions.

- Observability: Use the dashboard and custom charts to track KPIs.

- Artifact Management: Manage and version prompts, datasets, and evaluators.
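The evaluation step above (define test cases and metrics, then run the agent over them) can be sketched in plain Python. This is only an illustration of the general workflow, not the HoneyHive SDK: `EvalCase`, `exact_match`, `toy_agent`, and `run_eval` are hypothetical names invented for this example, and a real setup would swap in your own agent and metrics.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class EvalCase:
    """One test case: an input prompt and the answer we expect (hypothetical type)."""
    prompt: str
    expected: str


def exact_match(output: str, expected: str) -> float:
    """Metric: 1.0 if the output equals the expected answer (case-insensitive), else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def toy_agent(prompt: str) -> str:
    """Stand-in for a real LLM agent; answers from a fixed lookup table."""
    answers = {"capital of france?": "Paris", "2 + 2?": "4"}
    return answers.get(prompt.lower(), "unknown")


def run_eval(
    agent: Callable[[str], str],
    cases: List[EvalCase],
    metric: Callable[[str, str], float],
) -> Dict[str, object]:
    """Run the agent on every case, score each output, and collect the failures."""
    scores = [metric(agent(case.prompt), case.expected) for case in cases]
    failures = [case.prompt for case, score in zip(cases, scores) if score < 1.0]
    mean_score = sum(scores) / len(scores) if scores else 0.0
    return {"mean_score": mean_score, "failures": failures}


CASES = [
    EvalCase("Capital of France?", "paris"),
    EvalCase("2 + 2?", "4"),
    EvalCase("Largest planet?", "Jupiter"),
]

report = run_eval(toy_agent, CASES, exact_match)
print(report)
```

Keeping the metric as a plain function makes it easy to replace exact matching with a stricter or more lenient scorer without touching the loop that runs the test suite.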

Why is HoneyHive important?

HoneyHive allows you to:

- Improve AI agent capabilities.

- Seamlessly deploy them to thousands of users.

- Ensure quality and performance across AI agents.

- Debug issues instantly.

Pricing:

Visit the HoneyHive website for pricing details.

Integrations:

- OpenTelemetry

- Git

Where can I use HoneyHive?

HoneyHive is used by a wide range of companies, from startups to Fortune 100 enterprises, for applications such as personalized e-commerce.

Best Alternative Tools to "HoneyHive"

LLMOps Space is a global community for LLM practitioners, focused on content, discussions, and events related to deploying Large Language Models into production.

Portkey equips AI teams with a production stack: Gateway, Observability, Guardrails, Governance, and Prompt Management in one platform.

Langbase is a serverless AI developer platform that allows you to build, deploy, and scale AI agents with memory and tools. It offers a unified API for 250+ LLMs and features like RAG, cost prediction and open-source AI agents.

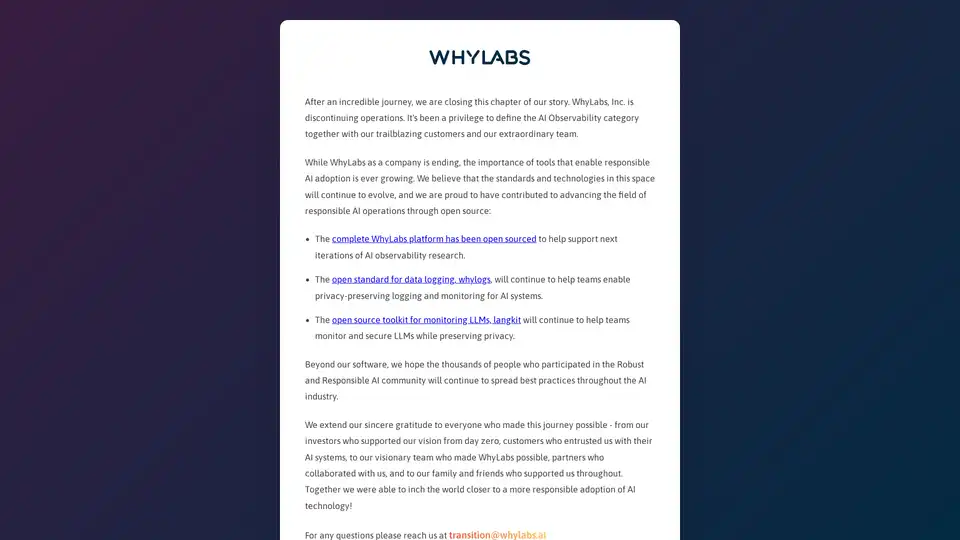

WhyLabs provides AI observability, LLM security, and model monitoring. It applies guardrails to generative AI applications in real time to mitigate risks.