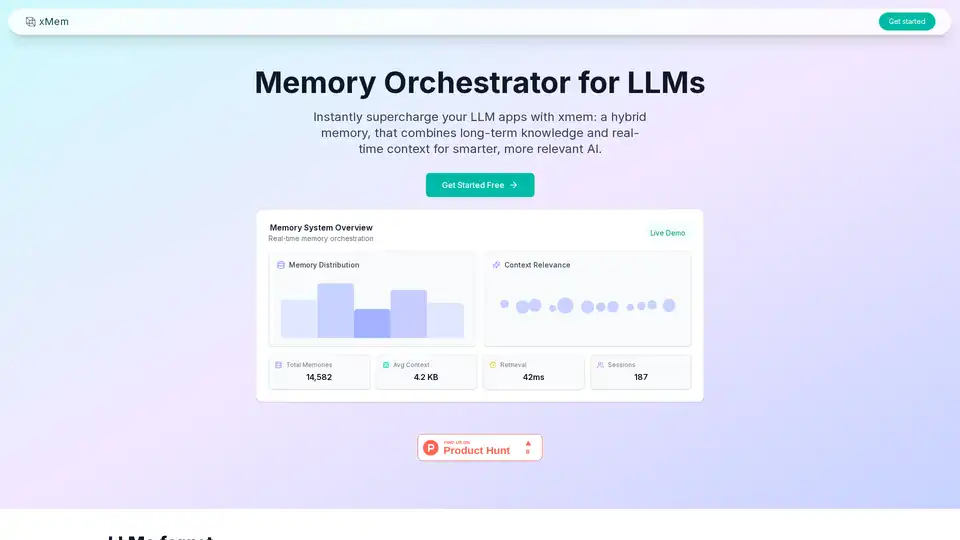

xMem

Overview of xMem

What is xMem?

xMem is a memory orchestrator for LLMs (Large Language Models) that combines long-term knowledge and real-time context to create smarter and more relevant AI applications.
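Combining long-term knowledge with real-time context can be pictured as one ranking score that mixes semantic similarity with recency. The sketch below is a hypothetical illustration, not xMem's documented algorithm. The function names `recencyWeight`, `blendedScore` and `rankMemories`, the weight `alpha`, and the one-hour half-life are all assumptions.

```javascript
// Hypothetical scoring sketch: NOT xMem's actual algorithm.
// Blends semantic similarity (0..1) with an exponential recency decay.
function recencyWeight(ageMs, halfLifeMs) {
  // Weight is 1 at age 0 and halves every halfLifeMs.
  return Math.pow(0.5, ageMs / halfLifeMs);
}

function blendedScore(similarity, ageMs, { alpha = 0.7, halfLifeMs = 3600000 } = {}) {
  // alpha (assumed value) sets how much long-term relevance outweighs recency.
  return alpha * similarity + (1 - alpha) * recencyWeight(ageMs, halfLifeMs);
}

function rankMemories(memories, now, options) {
  // Each memory: { text, similarity, timestamp }; highest blended score first.
  return memories
    .map((m) => ({ ...m, score: blendedScore(m.similarity, now - m.timestamp, options) }))
    .sort((a, b) => b.score - a.score);
}
```

With these defaults, a highly similar memory from yesterday still outranks a loosely related message from a moment ago, while recency decides between items of similar relevance.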

How to Use xMem?

Integrate xMem into your LLM application using its API or dashboard. xMem automatically assembles the most relevant context from long-term and session memory for every LLM call, so you do not have to tune the context manually.

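```javascript
// Option values are written as strings here because `in-memory` is not a valid
// JavaScript identifier; the exact values xMem accepts are an assumption.
const orchestrator = new xmem({
  vectorStore: 'chromadb',    // long-term memory backend
  sessionStore: 'in-memory',  // short-term session memory backend
  llmProvider: 'mistral',     // LLM that answers the query
});

// Top-level await requires an ES module (or wrap this call in an async function).
const response = await orchestrator.query({
  input: 'Tell me about our previous discussion',
});
```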
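The call above does not show how the context is put together. A minimal sketch of what an orchestrator might do inside a query is below, assuming the long-term snippets arrive already ranked and using a character budget as a stand-in for a token budget. `assembleContext`, its parameters, and the prompt layout are hypothetical, not part of xMem's API.

```javascript
// Hypothetical context-assembly sketch; names and prompt layout are assumptions.
function assembleContext({ input, longTerm, session, maxChars = 2000, maxTurns = 4 }) {
  const knowledge = [];
  let used = 0;

  // 1. Add long-term snippets in ranked order until the character budget is spent.
  for (const snippet of longTerm) {
    const line = `- ${snippet}`;
    if (used + line.length > maxChars) break;
    knowledge.push(line);
    used += line.length;
  }

  // 2. Keep only the most recent session turns for recency.
  const recent = session
    .slice(Math.max(0, session.length - maxTurns))
    .map((turn) => `${turn.role}: ${turn.content}`);

  // 3. Build the prompt that would be sent to the LLM provider.
  return [
    "Relevant knowledge:",
    ...knowledge,
    "",
    "Recent conversation:",
    ...recent,
    "",
    `User: ${input}`,
  ].join("\n");
}
```

Filling the budget with long-term knowledge first and then appending a fixed window of recent turns is only one possible policy; a real orchestrator could weigh the two sources differently.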

Why is xMem important?

LLMs do not retain information between sessions, which leads to a poor user experience. xMem addresses this by providing persistent memory for every user, helping keep the AI's responses relevant, accurate, and up to date.
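Persistent per-user memory comes down to keying memories by user and storing them outside the running process. The sketch below is a hypothetical illustration: `UserMemoryStore` and its methods are invented for this example, and the JSON string it produces stands in for whatever database or file a real deployment would use.

```javascript
// Hypothetical per-user memory store; not xMem's API.
class UserMemoryStore {
  constructor() {
    this.byUser = new Map(); // userId -> array of { text, timestamp }
  }

  remember(userId, text, timestamp = Date.now()) {
    if (!this.byUser.has(userId)) this.byUser.set(userId, []);
    this.byUser.get(userId).push({ text, timestamp });
  }

  recall(userId) {
    // Shallow copy so callers cannot add or remove stored memories.
    return [...(this.byUser.get(userId) ?? [])];
  }

  // Serialize so memories can outlive a session (e.g. saved to a DB or file).
  serialize() {
    return JSON.stringify(Object.fromEntries(this.byUser));
  }

  static deserialize(json) {
    const store = new UserMemoryStore();
    for (const [userId, entries] of Object.entries(JSON.parse(json))) {
      store.byUser.set(userId, entries);
    }
    return store;
  }
}
```

Restoring the store from its serialized form at the start of a new session is what lets an assistant pick up where a user left off.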

Key Features:

- Long-Term Memory: Store and retrieve knowledge, notes, and documents with vector search.

- Session Memory: Track recent chats, instructions, and context for recency and personalization.

- RAG Orchestration: Automatically assemble the best context for every LLM call. No manual tuning needed.

- Knowledge Graph: Visualize connections between concepts, facts, and user context in real time.
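The first two features can be sketched as two small data structures: a long-term store searched by cosine similarity over embeddings, and a session store that keeps only the most recent turns. This is a toy illustration built on assumptions: `LongTermMemory`, `SessionMemory` and the hand-written two-dimensional vectors are hypothetical, whereas a real deployment would obtain embeddings from a model and keep them in a vector database such as ChromaDB.

```javascript
// Toy versions of the two memory tiers; hypothetical, not xMem's implementation.
function cosineSimilarity(a, b) {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  // Treat a zero vector as having no similarity to anything.
  return normA && normB ? dot / (Math.sqrt(normA) * Math.sqrt(normB)) : 0;
}

class LongTermMemory {
  constructor() { this.items = []; } // each item: { text, embedding }
  add(text, embedding) { this.items.push({ text, embedding }); }
  search(queryEmbedding, k = 3) {
    // Linear scan: score every item, return the k most similar.
    return this.items
      .map((item) => ({ text: item.text, score: cosineSimilarity(queryEmbedding, item.embedding) }))
      .sort((a, b) => b.score - a.score)
      .slice(0, k);
  }
}

class SessionMemory {
  constructor(maxTurns = 10) { this.maxTurns = maxTurns; this.turns = []; }
  add(role, content) {
    this.turns.push({ role, content });
    // Drop the oldest turns once the window is full.
    if (this.turns.length > this.maxTurns) this.turns.splice(0, this.turns.length - this.maxTurns);
  }
  recent() { return [...this.turns]; }
}
```

A linear scan is fine for a handful of items; vector databases replace it with approximate nearest-neighbor indexes when the store grows large.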

Benefits:

- Never lose knowledge or context between sessions.

- Boost LLM accuracy with orchestrated context.

- Works with any open-source LLM and vector DB.

- Easy API and dashboard for seamless integration and monitoring.


Best Alternative Tools to "xMem"

Langbase is a serverless AI developer platform that allows you to build, deploy, and scale AI agents with memory and tools. It offers a unified API for 250+ LLMs and features like RAG, cost prediction and open-source AI agents.

vLLM is a high-throughput and memory-efficient inference and serving engine for LLMs, featuring PagedAttention and continuous batching for optimized performance.

Supermemory is a fast Memory API and Router that adds long-term memory to your LLM apps. Store, recall, and personalize in milliseconds using the Supermemory SDK and MCP.

Agents-Flex is a simple and lightweight LLM application development framework developed in Java, similar to LangChain.