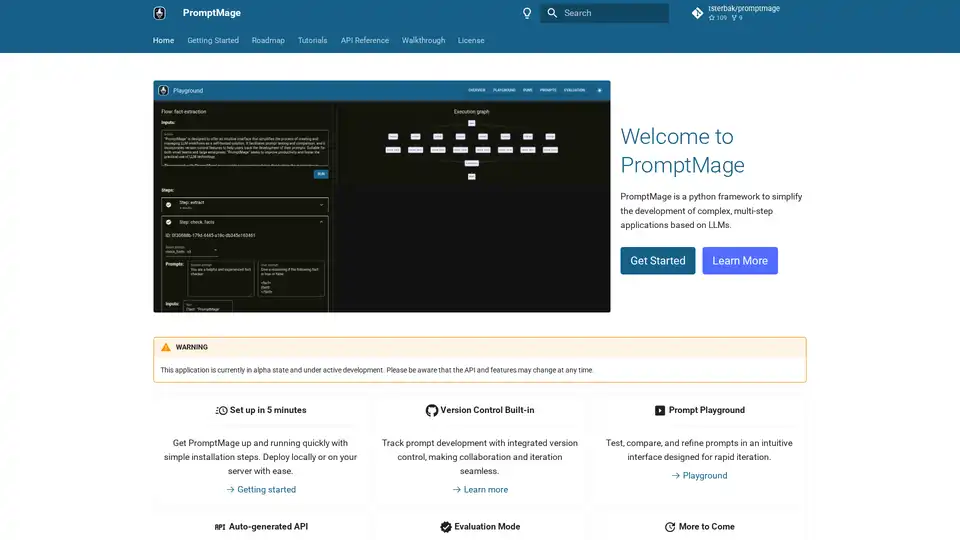

PromptMage

Overview of PromptMage

PromptMage: Simplifying LLM Application Development

What is PromptMage?

PromptMage is a Python framework designed to streamline the development of complex, multi-step applications based on Large Language Models (LLMs). It provides an intuitive interface for creating and managing LLM workflows, making it a valuable self-hosted solution for developers, researchers, and organizations.

Key Features and Benefits:

- Simplified LLM Workflow Management: Provides an intuitive interface for creating and managing multi-step LLM workflows.

- Prompt Testing and Comparison: Facilitates prompt testing and comparison, allowing users to refine prompts for optimal performance.

- Version Control: Incorporates version control features, enabling users to track the development of their prompts and collaborate effectively.

- Auto-Generated API: Leverages a FastAPI-powered, automatically created API for easy integration and deployment.

- Evaluation Mode: Assesses prompt performance through manual and automatic testing, ensuring reliability before deployment.

- Rapid Iteration: The prompt playground allows for quick testing, comparison, and refinement of prompts.

- Seamless Collaboration: Integrated version control makes collaboration and iteration seamless.

- Easy Integration and Deployment: An automatically created API simplifies integration and deployment.
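To make the idea of prompt testing and comparison concrete, here is a minimal sketch in plain Python. It does not use PromptMage's own API: `fake_llm`, `exact_match`, and `compare_prompts` are hypothetical names, and the model call is replaced by a deterministic stand-in so the example runs offline.

```python
from typing import Callable, Dict, List, Tuple


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; replies tersely only when asked to."""
    if "one word" in prompt:
        return "positive"
    return "The review sounds fairly positive overall."


def exact_match(output: str, expected: str) -> float:
    """Automatic scorer: 1.0 when the output equals the expected label, else 0.0."""
    return 1.0 if output.strip().lower() == expected.lower() else 0.0


def compare_prompts(
    templates: Dict[str, str],
    cases: List[Tuple[str, str]],
    llm: Callable[[str], str],
    scorer: Callable[[str, str], float],
) -> Dict[str, float]:
    """Run every template against every test case and return the mean score per template."""
    scores: Dict[str, float] = {}
    for name, template in templates.items():
        results = [scorer(llm(template.format(text=text)), expected) for text, expected in cases]
        scores[name] = sum(results) / len(results)
    return scores


# Two candidate prompt templates and a tiny labelled test set (illustrative data only).
templates = {
    "v1": "What is the sentiment of this review? {text}",
    "v2": "Answer in one word. What is the sentiment of this review? {text}",
}
cases = [("Great product, works well.", "positive"), ("Love it.", "positive")]

scores = compare_prompts(templates, cases, fake_llm, exact_match)
best = max(scores, key=scores.get)
print(scores, "best:", best)
```

In a real workflow the scorer could be a human rating collected in a review interface instead of an exact-match check; the comparison loop stays the same.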

How does PromptMage work?

PromptMage works by providing a set of tools and features that simplify the process of developing and managing LLM-based applications. These include:

- Prompt Playground: A web interface for testing and comparing prompts.

- Version Control: A system for tracking changes to prompts over time.

- API Generation: A tool for automatically generating APIs from prompts.

- Evaluation Mode: A system for evaluating the performance of prompts.
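The version-tracking idea in the list above can be sketched as an append-only history per prompt. This is an illustration of the concept only, under the assumption that each saved change gets a new version number; `PromptVersion` and `PromptHistory` are hypothetical names and do not describe how PromptMage stores prompts internally.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class PromptVersion:
    """One immutable snapshot of a prompt template."""
    version: int
    template: str
    note: str


@dataclass
class PromptHistory:
    """Append-only store: every save creates a new version instead of overwriting."""
    versions: Dict[str, List[PromptVersion]] = field(default_factory=dict)

    def save(self, name: str, template: str, note: str = "") -> PromptVersion:
        history = self.versions.setdefault(name, [])
        entry = PromptVersion(version=len(history) + 1, template=template, note=note)
        history.append(entry)
        return entry

    def latest(self, name: str) -> PromptVersion:
        return self.versions[name][-1]

    def get(self, name: str, version: int) -> PromptVersion:
        # Versions are numbered from 1, list indices from 0.
        return self.versions[name][version - 1]


history = PromptHistory()
history.save("summarize", "Summarize: {text}", note="initial draft")
history.save("summarize", "Summarize in three bullet points: {text}", note="more structure")

current = history.latest("summarize")
original = history.get("summarize", 1)
print(current.version, current.note)
```

Keeping old versions immutable means an earlier prompt can always be retrieved and compared against the current one.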

Core Functionality:

- Prompt Playground Integration: Seamlessly integrate the prompt playground into your workflow for fast iteration.

- Prompts as First-Class Citizens: Treat prompts as first-class citizens with version control and collaboration features.

- Manual and Automatic Testing: Validate prompts through manual and automatic testing.

- Easy Sharing: Share results easily with domain experts and stakeholders.

- FastAPI API: Built-in, automatically created API with FastAPI for easy integration and deployment.

- Type Hinting: Use type hints for automatic input inference and validation.
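As a rough illustration of how type hints can drive inference and validation, the sketch below reads a function's annotations with the standard library and checks arguments against them before calling it. The helpers `input_schema` and `validate_and_call` are hypothetical and are not PromptMage functions; the framework's actual mechanism may differ.

```python
from typing import Any, Callable, Dict, get_type_hints


def input_schema(func: Callable[..., Any]) -> Dict[str, type]:
    """Infer the expected inputs of a function from its type hints."""
    hints = get_type_hints(func)
    hints.pop("return", None)  # keep only the parameters
    return hints


def validate_and_call(func: Callable[..., Any], **kwargs: Any) -> Any:
    """Check keyword arguments against the inferred schema, then call the function.

    Only plain classes such as str or int are supported here; generic hints
    like List[str] would need a fuller validator.
    """
    schema = input_schema(func)
    missing = set(schema) - set(kwargs)
    if missing:
        raise TypeError(f"missing inputs: {sorted(missing)}")
    for name, expected in schema.items():
        if not isinstance(kwargs[name], expected):
            raise TypeError(f"{name} should be {expected.__name__}")
    return func(**kwargs)


def summarize(text: str, max_words: int) -> str:
    """Example step: keep the first max_words words of the text."""
    return " ".join(text.split()[:max_words])


schema = input_schema(summarize)
result = validate_and_call(summarize, text="a b c d", max_words=2)
print(schema, result)
```

The same inferred schema could be used to render input fields in a web interface or to describe request bodies in an API.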

Who is PromptMage for?

PromptMage is suitable for:

- Developers building LLM-powered applications

- Researchers experimenting with LLMs

- Organizations seeking to streamline their LLM workflows

Use cases

- product-review-research: An AI web app built with PromptMage that provides in-depth product analysis by researching trustworthy online reviews.

Getting Started

To get started with PromptMage, you can follow these steps:

- Install PromptMage using pip: `pip install promptmage`

- Explore the documentation and tutorials to learn how to use the framework.

- Start building your LLM application!
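After installing, one quick way to confirm that the package is present in the active environment is to query its metadata with the standard library. This sketch assumes only the distribution name `promptmage` from the pip command above; `installed_version` is a hypothetical helper.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(distribution: str = "promptmage") -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return version(distribution)
    except PackageNotFoundError:
        return None


found = installed_version()
if found:
    print(f"promptmage {found} is installed")
else:
    print("promptmage is not installed in this environment")
```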

Why choose PromptMage?

PromptMage is a pragmatic solution that bridges the current gap in LLM workflow management. It empowers developers, researchers, and organizations by making LLM technology more accessible and manageable, thereby supporting the next wave of AI innovations.

By using PromptMage, you can:

- Increase productivity

- Improve the quality of your LLM applications

- Facilitate collaboration

- Accelerate innovation

Contributing to PromptMage

The PromptMage project welcomes contributions from the community. If you're interested in improving PromptMage, you can contribute in the following ways:

- Reporting Bugs: Submit an issue in our repository, providing a detailed description of the problem and steps to reproduce it.

- Improving Documentation: If you find any errors or have suggestions for improving the documentation, please submit an issue or a pull request.

- Fixing Bugs: Check out our list of open issues and submit a pull request to fix any bugs you find.

- Feature Requests: Have ideas on how to make PromptMage better? We'd love to hear from you! Please submit an issue, detailing your suggestions.

- Pull Requests: Contributions via pull requests are highly appreciated. Please ensure your code adheres to the coding standards of the project, and submit a pull request with a clear description of your changes.

For more information or inquiries, you can contact the project maintainers at promptmage@tobiassterbak.com.


Best Alternative Tools to "PromptMage"

Future AGI is a unified LLM observability and AI agent evaluation platform that helps enterprises achieve 99% accuracy in AI applications through comprehensive testing, evaluation, and optimization tools.

Lunary is an open-source LLM engineering platform providing observability, prompt management, and analytics for building reliable AI applications. It offers tools for debugging, tracking performance, and ensuring data security.

Parea AI is an experimentation and human annotation platform that helps AI teams confidently ship LLM applications. It offers features for experiment tracking, observability, human review, prompt testing, and prompt deployment.