Mercury

Overview of Mercury

Mercury: Revolutionizing AI with Diffusion LLMs

What is Mercury? Mercury, developed by Inception, represents a new era in Large Language Models (LLMs) by leveraging diffusion technology. These diffusion LLMs (dLLMs) offer significant advantages in speed, efficiency, accuracy, and controllability compared to traditional auto-regressive LLMs.

How does Mercury work?

Unlike conventional LLMs that generate text sequentially, one token at a time, Mercury's dLLMs generate tokens in parallel. This parallel processing dramatically increases speed and optimizes GPU efficiency, making it ideal for real-time AI applications.

Key Features and Benefits:

- Blazing Fast Inference: Experience ultra-low latency, enabling responsive AI interactions.

- Frontier Quality: Benefit from high accuracy and controllable text generation.

- Cost-Effective: Reduce operational costs with maximized GPU efficiency.

- OpenAI API Compatible: Seamlessly integrate Mercury into existing workflows as a drop-in replacement for traditional LLMs.

- Large Context Window: Both Mercury Coder and Mercury support a 128K context window.

AI Applications Powered by Mercury:

Mercury's speed and efficiency unlock a wide range of AI applications:

- Coding: Accelerate coding workflows with lightning-fast autocomplete, tab suggestions, and editing.

- Voice: Deliver responsive voice experiences in customer service, translation, and sales.

- Search: Instantly surface relevant data from any knowledge base, minimizing research time.

- Agents: Run complex multi-turn systems while maintaining low latency.

Mercury Models:

- Mercury Coder: Optimized for coding workflows, supporting streaming, tool use, and structured output. Pricing: Input $0.25 | Output $1 per 1M tokens.

- Mercury: General-purpose dLLM providing ultra-low latency, also supporting streaming, tool use, and structured output. Pricing: Input $0.25 | Output $1 per 1M tokens.

Why choose Mercury?

Testimonials from industry professionals highlight Mercury's exceptional speed and impact:

- Jacob Kim, Software Engineer: "I was amazed by how fast it was. The multi-thousand tokens per second was absolutely wild, nothing like I've ever seen."

- Oliver Silverstein, CEO: "After trying Mercury, it's hard to go back. We are excited to roll out Mercury to support all of our voice agents."

- Damian Tran, CEO: "We cut routing and classification overheads to sub-second latencies even on complex agent traces."

Who is Mercury for?

Mercury is designed for enterprises seeking to:

- Enhance AI application performance.

- Reduce AI infrastructure costs.

- Gain a competitive edge with cutting-edge AI technology.

How to integrate Mercury:

Mercury is available through major cloud providers like AWS Bedrock and Azure Foundry. It's also accessible via platforms like OpenRouter and Quora. You can start with their API.

To explore fine-tuning, private deployments, and forward-deployed engineering support, contact Inception.

Mercury offers a transformative approach to AI, making it faster, more efficient, and more accessible for a wide range of applications. Try the Mercury API today and experience the next generation of AI.

AI Programming Assistant Auto Code Completion AI Code Review and Optimization AI Low-Code and No-Code Development

Best Alternative Tools to "Mercury"

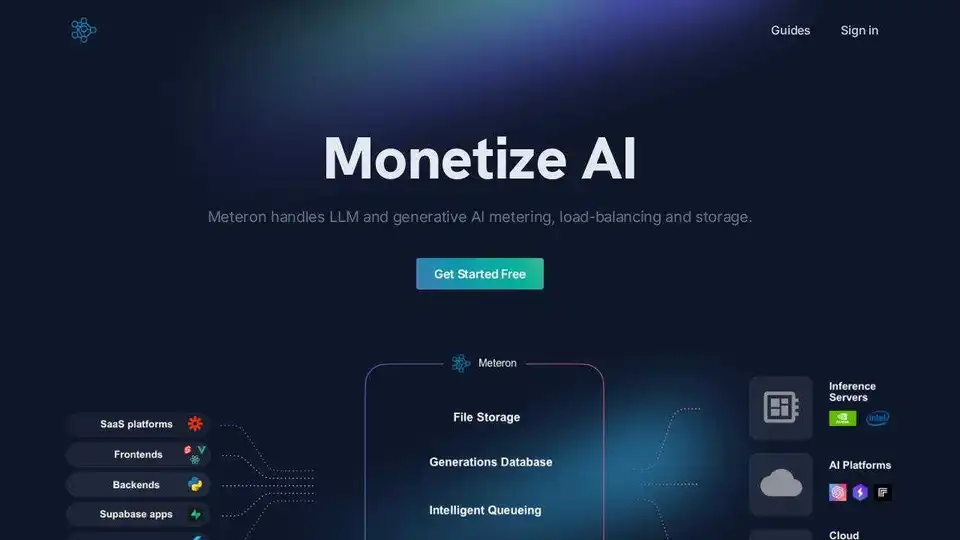

Meteron AI is an all-in-one AI toolset that handles LLM and generative AI metering, load-balancing, and storage, freeing developers to focus on building AI-powered products.

Chat with AI using your API keys. Pay only for what you use. GPT-4, Gemini, Claude, and other LLMs supported. The best chat LLM frontend UI for all AI models.

PromptBuilder is an AI prompt engineering platform designed to help users generate, optimize, and organize high-quality prompts for various AI models like ChatGPT, Claude, and Gemini, ensuring consistent and effective AI outputs.

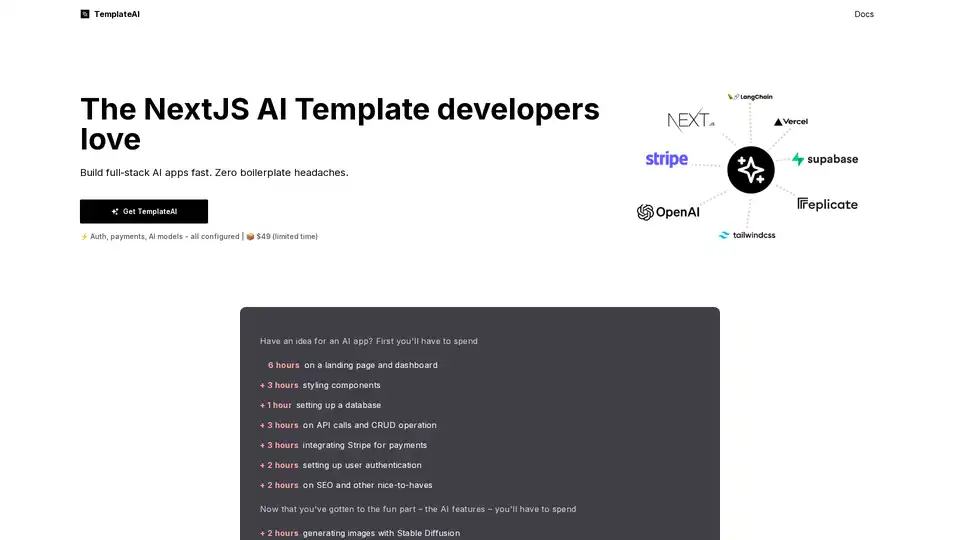

TemplateAI is the leading NextJS template for AI apps, featuring Supabase auth, Stripe payments, OpenAI/Claude integration, and ready-to-use AI components for fast full-stack development.