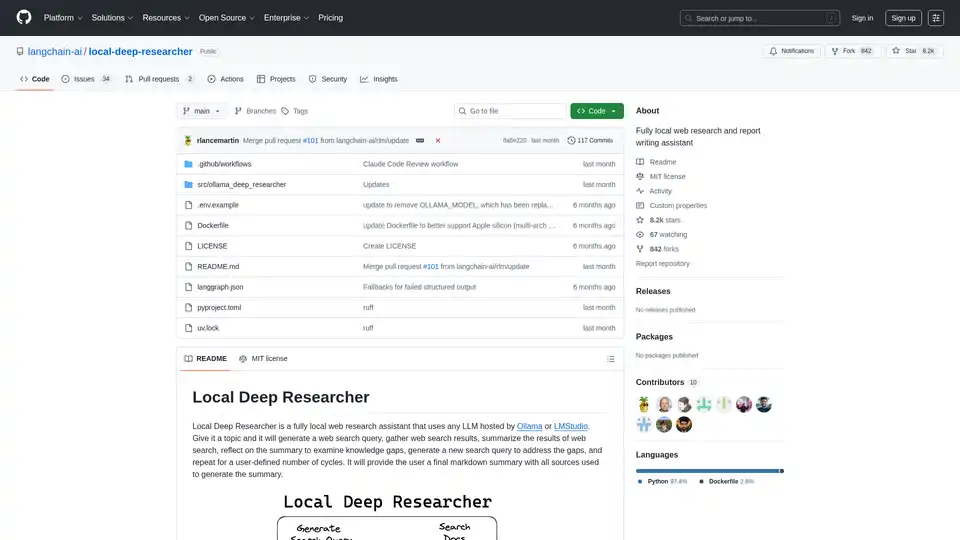

Local Deep Researcher

Overview of Local Deep Researcher

What is Local Deep Researcher?

Local Deep Researcher is an open-source web research assistant that runs on your own machine. It uses large language models (LLMs) hosted through Ollama or LMStudio to research a topic on the web and generate a report with source citations.

How Does Local Deep Researcher Work?

The system runs an iterative research loop:

Research Cycle Process:

- Query Generation: Given a user-provided topic, the local LLM generates an optimized web search query

- Source Retrieval: Uses configured search tools (DuckDuckGo, SearXNG, Tavily, or Perplexity) to find relevant online sources

- Content Summarization: The LLM analyzes and summarizes the findings from the web search results

- Gap Analysis: The system reflects on the summary to identify knowledge gaps and missing information

- Iterative Refinement: Generates new search queries to address identified gaps and repeats the process

- Final Report Generation: After the user-configured number of cycles, produces a markdown report that cites all of its sources
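The cycle above can be sketched in plain Python. This is a minimal illustration, not the project's code: the project builds its workflow as a LangGraph graph, whereas the names below (`ResearchState`, `run_research`, `generate_query`, `search`, `summarize`, `reflect`, `final_report`) are hypothetical stand-ins. The LLM and search steps are passed in as plain callables so the loop logic can be read and tested on its own.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ResearchState:
    topic: str
    query: str = ""
    summary: str = ""
    sources: list[str] = field(default_factory=list)
    loops_done: int = 0


def run_research(
    topic: str,
    generate_query: Callable[[str], str],  # hypothetical LLM call
    search: Callable[[str], list[dict]],  # hypothetical search call
    summarize: Callable[[str, str, list[dict]], str],  # hypothetical LLM call
    reflect: Callable[[str, str], str],  # hypothetical LLM call
    max_loops: int = 3,  # mirrors the documented default of 3 cycles
) -> ResearchState:
    state = ResearchState(topic=topic)
    state.query = generate_query(topic)  # query generation
    while state.loops_done < max_loops:
        results = search(state.query)  # source retrieval
        state.sources.extend(r["url"] for r in results)
        # summarization: fold the new results into the running summary
        state.summary = summarize(topic, state.summary, results)
        state.loops_done += 1
        if state.loops_done < max_loops:
            # gap analysis + iterative refinement: next query targets the gap
            state.query = reflect(topic, state.summary)
    return state


def final_report(state: ResearchState) -> str:
    # final report: summary followed by the cited sources as a markdown list
    cited = "\n".join(f"* {url}" for url in state.sources)
    return f"## Summary\n\n{state.summary}\n\n### Sources:\n{cited}\n"
```

Injecting the model and search steps as callables keeps the loop independent of any one provider, in line with the document's point that Ollama, LMStudio, and several search APIs are interchangeable.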

Core Features and Capabilities

- Local LLM Inference: The language model runs on your machine; only the generated search queries are sent to the configured search provider

- Multiple LLM Support: Compatible with any LLM hosted through Ollama or LMStudio

- Flexible Search Integration: Supports DuckDuckGo (default), SearXNG, Tavily, and Perplexity search APIs

- Configurable Research Depth: Users can set the number of research cycles (default: 3 iterations)

- Structured Output: Generates well-formatted markdown reports with proper source citations

- Visual Workflow Monitoring: Integrated with LangGraph Studio for real-time process visualization
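The "Structured Output" feature comes down to appending a citation list to the summary. A minimal sketch, assuming each search result is a dict with a `url` key and an optional `title` key (an assumed shape, not the project's documented data model). `format_sources` and `build_report` are hypothetical names, and a URL collected in more than one cycle is cited only once.

```python
def format_sources(results: list[dict]) -> str:
    """Render search results as a deduplicated markdown source list."""
    seen: set[str] = set()
    lines: list[str] = []
    for r in results:
        url = r["url"]
        if url in seen:
            continue  # skip a source that is already cited
        seen.add(url)
        title = r.get("title")
        # fall back to the bare URL when a result has no title
        lines.append(f"* {title} : {url}" if title else f"* {url}")
    return "\n".join(lines)


def build_report(summary: str, results: list[dict]) -> str:
    # summary first, then the citation list under its own heading
    return f"## Summary\n\n{summary}\n\n### Sources:\n{format_sources(results)}\n"
```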

Technical Requirements and Setup

Supported Platforms:

- macOS (recommended)

- Windows

- Linux via Docker

Required Components:

- Python 3.11+

- Ollama or LMStudio for local LLM hosting

- API keys for Tavily or Perplexity, if you use those search services (DuckDuckGo requires no key)

Installation and Configuration

Quick Setup Process:

- Clone the repository from GitHub

- Configure environment variables in the .env file

- Select your preferred LLM provider (Ollama or LMStudio)

- Choose search API configuration

- Launch through LangGraph Studio

Docker Deployment: The project includes Docker support for containerized deployment. Ollama is not bundled in the container, so it must run separately and be reachable from the container over the network.

Model Compatibility Considerations

The system requires LLMs capable of structured JSON output. Some models, such as DeepSeek R1 (7B and 1.5B), have trouble with JSON mode; the assistant includes fallback mechanisms to handle these cases.
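One way such a fallback can work is sketched below. It strips any `<think>...</think>` block that reasoning models such as DeepSeek R1 emit, tries to parse the reply as JSON, then tries the brace-delimited span inside surrounding prose, and finally falls back to a generic follow-up query. The function name `parse_reflection`, the keys `knowledge_gap` and `follow_up_query`, and the fallback query text are assumptions, not the project's confirmed implementation.

```python
import json
import re


def parse_reflection(raw: str, topic: str) -> dict:
    """Parse a model's JSON reply, tolerating common formatting problems."""
    # Reasoning models may prefix output with <think>...</think>; drop it first.
    text = re.sub(r"<think>.*?</think>", "", raw, flags=re.DOTALL).strip()
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        # Fallback 1: parse the span from the first "{" to the last "}".
        match = re.search(r"\{.*\}", text, flags=re.DOTALL)
        try:
            data = json.loads(match.group(0)) if match else {}
        except json.JSONDecodeError:
            data = {}
    if not isinstance(data, dict):
        data = {}  # valid JSON but not an object, e.g. a bare list
    # Fallback 2: a generic query keeps the research loop moving.
    query = data.get("follow_up_query") or f"Tell me more about {topic}"
    return {"knowledge_gap": data.get("knowledge_gap", ""), "follow_up_query": query}
```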

Who Should Use Local Deep Researcher?

Ideal Users Include:

- Researchers and Academics needing comprehensive literature reviews

- Content Creators requiring well-researched background information

- Students working on research papers and assignments

- Journalists conducting investigative research

- Business Professionals needing market research and competitive analysis

- Privacy-Conscious Users who prefer local processing over cloud-based solutions

Practical Applications and Use Cases

- Academic Research: Conduct literature reviews and gather sources for papers

- Market Analysis: Research competitors and industry trends

- Content Research: Gather information for blog posts, articles, and reports

- Due Diligence: Investigate topics thoroughly with proper source documentation

- Learning and Education: Explore topics in depth with automated research assistance

Why Choose Local Deep Researcher?

Key Advantages:

- Privacy: Model inference stays on your local machine; only the search queries the system generates are sent to the search service you configure

- Cost-Effective: The default DuckDuckGo search needs no API key, so basic use incurs no API costs

- Customizable: Adjust research depth and sources to match your specific needs

- Transparent: Full visibility into the research process and sources used

- Open Source: The code is public, so you can inspect, modify, and contribute to it

Getting the Best Results

For optimal performance:

- Use larger, more capable LLMs when your hardware allows

- Configure appropriate search APIs for your specific needs

- Adjust the number of research cycles based on topic complexity

- Review and verify important sources manually for critical research

Local Deep Researcher combines local LLM inference with iterative web search to produce cited research reports, while keeping the language model under your control.


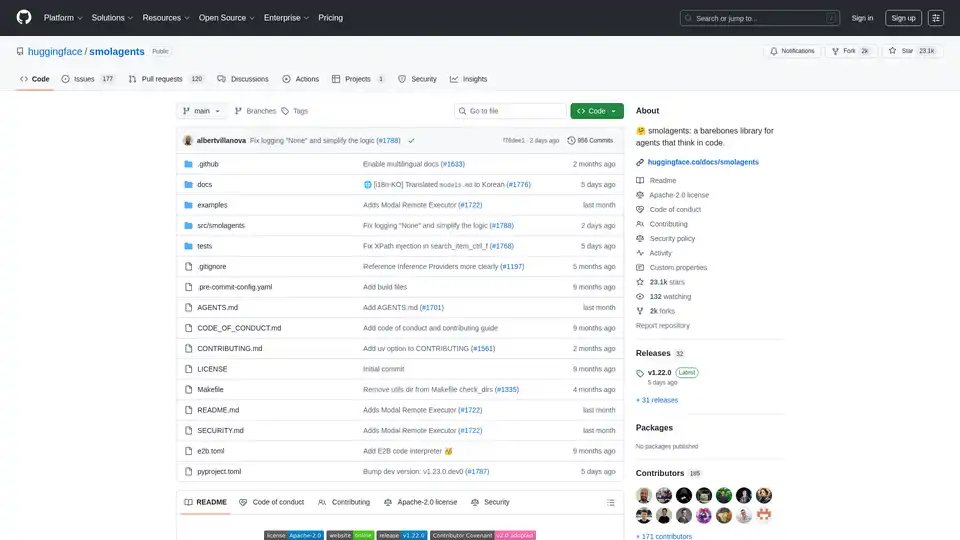

Best Alternative Tools to "Local Deep Researcher"

Smolagents is a minimalistic Python library for creating AI agents that reason and act through code. It supports LLM-agnostic models, secure sandboxes, and seamless Hugging Face Hub integration for efficient, code-based agent workflows.

EnConvo is an AI Agent Launcher for macOS, revolutionizing productivity with instant access and workflow automation. Features 150+ built-in tools, MCP support, and AI Agent mode.

Transform your workflow with BrainSoup! Create custom AI agents to handle tasks and automate processes through natural language. Enhance AI with your data while prioritizing privacy and security.

Load CSV and analyze it in a visual step-by-step interface. Cleanup, extract, summarize, or make sentiment analysis with your personal AI agent.