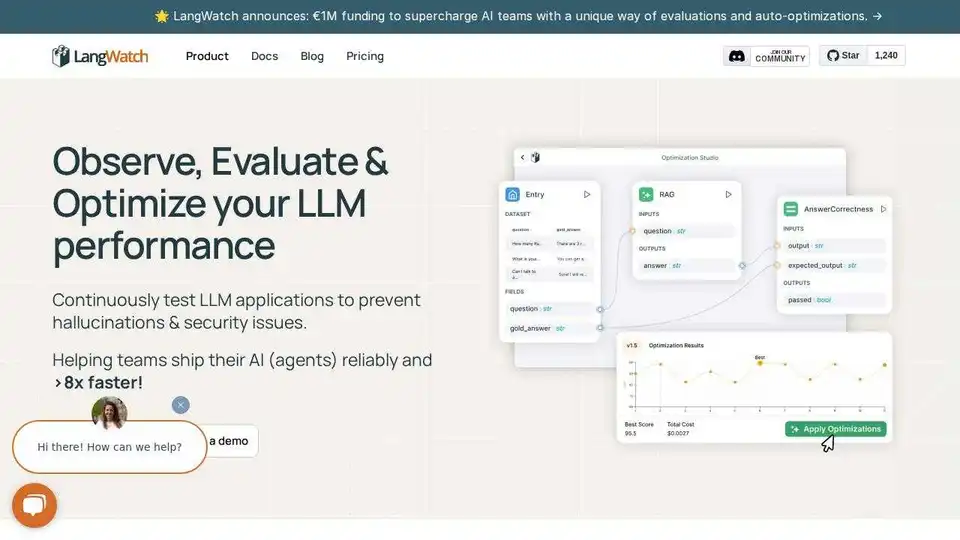

LangWatch

Overview of LangWatch

LangWatch: AI Agent Testing and LLM Evaluation Platform

LangWatch is an open-source platform designed for AI agent testing, LLM evaluation, and LLM observability. It helps teams simulate AI agents, track responses, and catch failures before they impact production.

Key Features:

- Agent Simulation: Test AI agents with simulated users to catch edge cases and prevent regressions.

- LLM Evaluation: Evaluate the performance of LLMs with built-in tools for data selection and testing.

- LLM Observability: Track responses and debug issues in your production AI.

- Framework Flexible: Works with any LLM app, agent framework, or model.

- OpenTelemetry Native: Integrates with all LLMs & AI agent frameworks.

- Self-Hosted: Fully open-source; run locally or self-host.

How to Use LangWatch:

- Build: Design smarter agents with evidence, not guesswork.

- Evaluate: Use built-in tools for data selection, evaluation, and testing.

- Deploy: Reduce rework, manage regressions, and build trust in your AI.

- Monitor: Track responses and catch failures before production.

- Optimize: Collaborate with your entire team to run experiments, evaluate datasets, and manage prompts and flows.

Integrations:

LangWatch integrates with various frameworks and models, including:

- Python

- Typescript

- OpenAI agents

- LiteLLM

- DSPy

- LangChain

- Pydantic AI

- AWS BedRock

- Agno

- Crew AI

Is LangWatch Right for You?

LangWatch is suitable for AI Engineers, Data Scientists, Product Managers, and Domain Experts who want to collaborate on building better AI agents.

FAQ:

- How does LangWatch work?

- What is LLM observability?

- What are LLM evaluations?

- Is LangWatch self-hosted available?

- How does LangWatch compare to Langfuse or LangSmith?

- What models and frameworks does LangWatch support and how do I integrate?

- Can I try LangWatch for free?

- How does LangWatch handle security and compliance?

- **How can I contribute to the project?

LangWatch helps you ship agents with confidence. Get started in as little as 5 minutes.

AI Research and Paper Tools Machine Learning and Deep Learning Tools AI Datasets and APIs AI Model Training and Deployment

Best Alternative Tools to "LangWatch"

Elixir is an AI Ops and QA platform designed for monitoring, testing, and debugging AI voice agents. It offers automated testing, call review, and LLM tracing to ensure reliable performance.

Maxim AI is an end-to-end evaluation and observability platform that helps teams ship AI agents reliably and 5x faster with comprehensive testing, monitoring, and quality assurance tools.

Future AGI is a unified LLM observability and AI agent evaluation platform that helps enterprises achieve 99% accuracy in AI applications through comprehensive testing, evaluation, and optimization tools.

Future AGI offers a unified LLM observability and AI agent evaluation platform for AI applications, ensuring accuracy and responsible AI from development to production.