fal.ai

Overview of fal.ai

What is fal.ai?

fal.ai is a generative media platform for developers, offering a wide range of AI models for image, video, and audio generation. It aims to give developers an easy, cost-effective way to integrate generative AI into their applications.

Key Features:

- Extensive Model Gallery: Access over 600 production-ready image, video, audio, and 3D models.

- Serverless GPUs: Run fast inference on fal's globally distributed serverless engine, with no GPU configuration or autoscaling setup required.

- Unified API and SDKs: Use a simple API and SDKs to call hundreds of open models or your own LoRAs in minutes.

- Dedicated Clusters: Spin up dedicated compute to fine-tune, train, or run custom models with guaranteed performance.

- Fastest Inference Engine: According to fal, the fal Inference Engine™ is up to 10x faster.

How to use fal.ai?

- Explore Models: Choose from a rich library of models for image, video, voice, and code generation.

- Call API: Access the models using a simple API. No fine-tuning or setup needed.

- Deploy Models: Deploy private or fine-tuned models with one click.

- Utilize Serverless GPUs: Accelerate your workloads with fal Inference Engine.

Why choose fal.ai?

- Speed: fal bills its engine as the fastest inference engine for diffusion models.

- Scalability: Scale from prototype to 100M+ daily inference calls.

- Ease of Use: Unified API and SDKs for easy integration.

- Flexibility: Deploy private or fine-tuned models with one click.

- Enterprise-Grade: SOC 2 compliant and ready for enterprise procurement processes.

Where can I use fal.ai?

fal.ai is used by developers and leading companies to power AI features in various applications, including:

- Image and Video Search: Used by Perplexity to scale generative media efforts.

- Text-to-Speech Infrastructure: Used by PlayAI to provide near-instant voice responses.

- Image and Video Generation Bots: Used by Quora to power Poe's official bots.

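```javascript
// Requires: npm install @fal-ai/client
// The client reads the API key from the FAL_KEY environment variable.
// Top-level await needs an ES module (e.g. a .mjs file).
import { fal } from "@fal-ai/client";

const result = await fal.subscribe("fal-ai/fast-sdxl", {
  input: {
    prompt: "photo of a cat wearing a kimono",
  },
  logs: true, // stream model logs while the request runs
  onQueueUpdate: (update) => {
    // Print log messages once the request has started processing.
    if (update.status === "IN_PROGRESS") {
      update.logs
        .map((log) => log.message)
        .forEach((message) => console.log(message));
    }
  },
});

console.log(result.data);
console.log(result.requestId);
```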
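The snippet above prints the raw result and streams logs only while the request is in progress. As a minimal sketch of post-processing, the hypothetical helpers below pull image URLs out of a result and turn any queue update into printable lines. They assume a text-to-image output shaped like `{ data: { images: [{ url, ... }] } }` and queue statuses `IN_QUEUE` (with `queue_position`), `IN_PROGRESS` (with `logs`), and `COMPLETED`; these shapes are assumptions to check against the model's documented schema, and the mock result lets the sketch run without an API key.

```javascript
// Hypothetical helpers for working with fal.subscribe() results.
// ASSUMPTION: the result and queue-update shapes below are illustrative,
// not a documented contract; verify them against the model's schema.

// Collect image URLs, assuming output like { data: { images: [{ url }] } }.
function extractImageUrls(result) {
  const images = result?.data?.images ?? [];
  return images
    .map((image) => image?.url)
    .filter((url) => typeof url === "string");
}

// Turn a queue update into printable lines, assuming the statuses
// "IN_QUEUE" (with queue_position), "IN_PROGRESS" (with logs), "COMPLETED".
function describeQueueUpdate(update) {
  switch (update.status) {
    case "IN_QUEUE":
      return [`queued at position ${update.queue_position ?? "unknown"}`];
    case "IN_PROGRESS":
      return (update.logs ?? []).map((log) => log.message);
    case "COMPLETED":
      return ["completed"];
    default:
      return [`unrecognized status: ${update.status}`];
  }
}

// Mocked result (hypothetical data) so the sketch runs offline.
const mockResult = {
  data: {
    images: [{ url: "https://example.com/cat.png", width: 1024, height: 1024 }],
  },
  requestId: "mock-request-id",
};

console.log(extractImageUrls(mockResult));
console.log(describeQueueUpdate({ status: "IN_QUEUE", queue_position: 2 }));
```

To use the second helper with the real client, pass `onQueueUpdate: (update) => describeQueueUpdate(update).forEach((line) => console.log(line))` to `fal.subscribe`, which reports queue position as well as logs.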

Categories: AI Video Generation, AI Video Editing, AI Motion Capture and Animation, AI Virtual Human and Digital Avatar, 3D Video Generation

Best Alternative Tools to "fal.ai"

Cloudflare Workers AI allows you to run serverless AI inference tasks on pre-trained machine learning models across Cloudflare's global network, offering a variety of models and seamless integration with other Cloudflare services.

NVIDIA NIM offers APIs for optimized inference and deployment of leading AI models. Build enterprise generative AI applications with serverless APIs, or self-host on your own GPU infrastructure.

Cerebrium is a serverless AI infrastructure platform simplifying the deployment of real-time AI applications with low latency, zero DevOps, and per-second billing. Deploy LLMs and vision models globally.

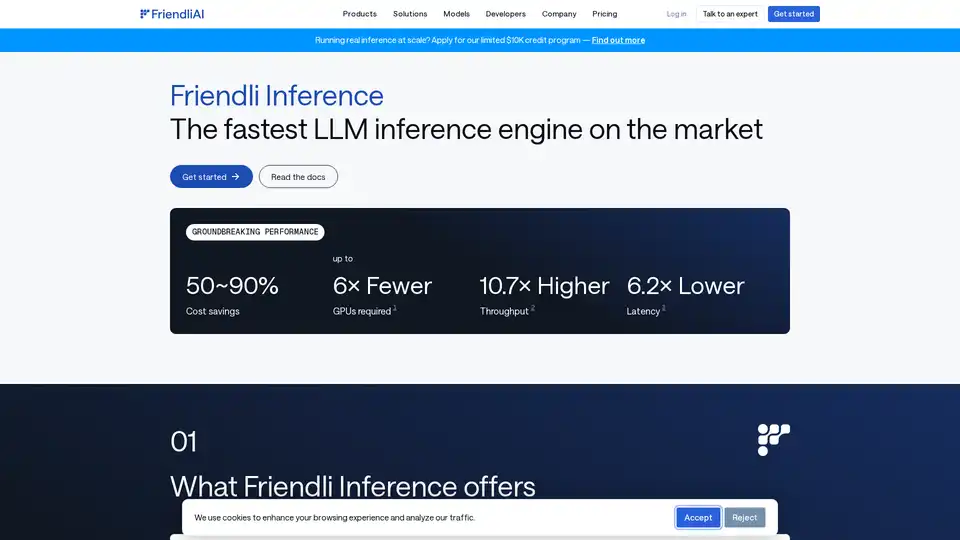

Friendli Inference is an LLM inference engine optimized for speed and cost-effectiveness; Friendli claims it cuts GPU costs by 50-90% while delivering high throughput and low latency.