dstack

Overview of dstack

What is dstack?

dstack is an open-source AI container orchestration engine designed to streamline the development, training, and inference processes for machine learning (ML) teams. It offers a unified control plane for GPU provisioning and orchestration across various environments, including cloud, Kubernetes, and on-premises infrastructure. By reducing costs and preventing vendor lock-in, dstack empowers ML teams to focus on research and development rather than infrastructure management.

How does dstack work?

dstack operates as an orchestration layer that simplifies the management of AI infrastructure. It integrates natively with top GPU clouds, automating cluster provisioning and workload orchestration. It also supports Kubernetes and SSH fleets for connecting to on-premises clusters. Key functionalities include:

- GPU Orchestration: Efficiently manages GPU resources across different environments.

- Dev Environments: Enables easy connection of desktop IDEs to powerful cloud or on-premises GPUs.

- Scalable Service Endpoints: Facilitates the deployment of models as secure, auto-scaling, OpenAI-compatible endpoints.

dstack is compatible with any hardware, open-source tools, and frameworks, offering flexibility and avoiding vendor lock-in.
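To make "OpenAI-compatible endpoint" concrete, here is a minimal sketch of how a client might build a chat completion request for a model deployed as a service. It is an illustration under stated assumptions, not dstack's own client code: the base URL, token, and model name are hypothetical placeholders, and the request follows the general OpenAI chat completions format rather than anything specific to dstack.

```python
import json
import urllib.request

# Hypothetical placeholders: substitute your own service URL, token, and model name.
BASE_URL = "https://example-service.example.com/v1"
API_TOKEN = "YOUR_TOKEN"
MODEL_NAME = "my-model"


def build_chat_request(prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_TOKEN}",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_chat_request("Hello!")
    # Inspect the request without making a network call.
    print(request.get_method(), request.full_url)
    # To actually send it: urllib.request.urlopen(request)
```

Because the request shape is the standard one, existing OpenAI client libraries can typically be pointed at such an endpoint by changing only the base URL and credentials.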

Key Features of dstack

- Unified Control Plane: Provides a single interface for managing GPU resources across different environments.

- Native Integration with GPU Clouds: Automates cluster provisioning and workload orchestration with leading GPU cloud providers.

- Kubernetes and SSH Fleet Support: Connects to on-premises clusters using Kubernetes or SSH fleets.

- Dev Environments: Simplifies the development loop by allowing connection to cloud or on-premises GPUs.

- Scalable Service Endpoints: Deploys models as secure, auto-scaling, OpenAI-compatible endpoints.

- Single-Node & Distributed Tasks: Supports both single-instance experiments and multi-node distributed training.

Why Choose dstack?

dstack offers several compelling benefits for ML teams:

- Cost Reduction: Claims a 3-7x reduction in infrastructure costs through more efficient resource utilization.

- Vendor Lock-in Prevention: Works with any hardware, open-source tools, and frameworks.

- Simplified Infrastructure Management: Automates cluster provisioning and workload orchestration.

- Improved Development Workflow: Streamlines the development loop with easy-to-use dev environments.

According to user testimonials:

- Wah Loon Keng, Sr. AI Engineer @Electronic Arts: "With dstack, AI researchers at EA can spin up and scale experiments without touching infrastructure."

- Aleksandr Movchan, ML Engineer @Mobius Labs: "Thanks to dstack, my team can quickly tap into affordable GPUs and streamline our workflows from testing and development to full-scale application deployment."

How to use dstack?

- Installation: Install dstack via uv tool install "dstack[all]".

- Setup: Set up backends or SSH fleets.

- Team Addition: Add your team to the dstack environment.

dstack can be deployed anywhere with the dstackai/dstack Docker image.

Who is dstack for?

dstack is ideal for:

- ML teams looking to optimize GPU resource utilization.

- Organizations seeking to reduce infrastructure costs.

- AI researchers requiring scalable and flexible environments for experimentation.

- Engineers aiming to streamline their ML development workflow.

Best way to orchestrate AI containers?

dstack stands out as a premier solution for AI container orchestration, offering a seamless, efficient, and cost-effective approach to managing GPU resources across diverse environments. Its support for Kubernetes and SSH fleets, together with its native integration with top GPU clouds, makes it a versatile choice for any ML team aiming to enhance productivity and reduce infrastructure overhead.

Best Alternative Tools to "dstack"

SaladCloud offers affordable, secure, and community-driven distributed GPU cloud for AI/ML inference. Save up to 90% on compute costs. Ideal for AI inference, batch processing, and more.

Denvr Dataworks provides high-performance AI compute services, including on-demand GPU cloud, AI inference, and a private AI platform. Accelerate your AI development with NVIDIA H100, A100 & Intel Gaudi HPUs.

Nebius is an AI cloud platform designed to democratize AI infrastructure, offering flexible architecture, tested performance, and long-term value with NVIDIA GPUs and optimized clusters for training and inference.

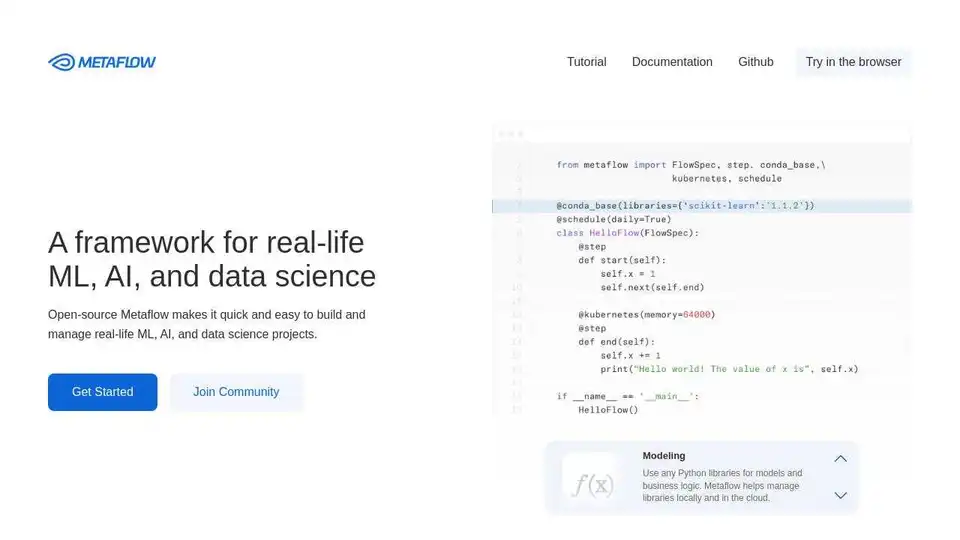

Metaflow is an open-source framework by Netflix for building and managing real-life ML, AI, and data science projects. Scale workflows, track experiments, and deploy to production easily.